Security Log Analysis And Machine Learning On Hadoop (Part 1 - Ingestion)

Author: Sean Moriarty

Email: smoriarty21@gmail.com

Project Link: http://www.hackthecause.info

For my most recent venture I have been using the Hadoop ecosystem to set up a sandbox for analyzing log data. I chose Hadoop for log storage for a few reasons. The main one is that Hadoop is what I use at work, so I grab any excuse I can to get another install under my belt. Beyond that, Hadoop provides a great set of tools for streaming, storing and analyzing log data, along with cheap, easy scalability. On the off chance that I generate terabytes of log data, I can expand my storage capacity simply by adding a new DataNode to my cluster.

For my environment I went with the Hortonworks stack for the live system and a raw Hadoop install for my development environment. I chose Hortonworks for a few reasons. Through my work I have had to install and manage Hortonworks, MapR and Cloudera stacks. I find MapR to be by far the fastest stack, but because it is much more resource intensive than its competitors, I felt it was not the right way to go. I do like Cloudera a lot, but I tend to prefer Ambari over CDH, and I can't help but love supporting Hortonworks for its heavy dedication to open source, its high-quality code and the great team of engineers it has assembled.

For ingesting the log data I use Flume, an Apache project for real-time data ingestion. Flume runs as agents that you set up on one or more machines. Each agent consists of a source, a channel and a sink. The source is just what it sounds like: your data source. The channel is a passive store that holds each event until it is delivered to the sink. The sink is your data's final destination within the agent; it may write the data to HDFS or pass it on to another agent via Avro. For a more detailed description of Flume go here:
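To make the source, channel and sink roles concrete, here is a toy, single-process Python model of an agent. It is not Flume's API (Flume is written in Java and its channels are transactional); the class and function names are hypothetical and only illustrate how a bounded channel buffers events between a source and a sink.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Optional


class MemoryChannel:
    """Passive, bounded buffer that holds events until a sink takes them."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._events: Deque[str] = deque()

    def put(self, event: str) -> bool:
        """Store an event; return False when the channel is full."""
        if len(self._events) >= self.capacity:
            return False
        self._events.append(event)
        return True

    def take(self) -> Optional[str]:
        """Remove and return the oldest event, or None if the channel is empty."""
        return self._events.popleft() if self._events else None


def drain(channel: MemoryChannel, sink: Callable[[str], None]) -> int:
    """Sink stage: deliver every buffered event and return how many were sent."""
    count = 0
    event = channel.take()
    while event is not None:
        sink(event)
        count += 1
        event = channel.take()
    return count


def run_agent(source: Iterable[str], channel: MemoryChannel,
              sink: Callable[[str], None]) -> int:
    """Source stage: push each new log line into the channel, draining when full."""
    delivered = 0
    for event in source:
        if not channel.put(event):
            delivered += drain(channel, sink)  # free space before retrying
            channel.put(event)  # succeeds: the channel is now empty
    delivered += drain(channel, sink)  # flush whatever is left
    return delivered


if __name__ == "__main__":
    received = []
    lines = ["GET /level1", "GET /level2", "POST /login"]
    print(run_agent(lines, MemoryChannel(capacity=2), received.append), received)
```

In real Flume the sink runs on its own thread and takes events inside a transaction, so a failed delivery is rolled back and the events stay in the channel for a retry; this toy model skips both.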

In my setup I have two agents running. The first agent sits on the web server and listens for new entries in my logs. It sends the data to an Avro sink, which passes it to an agent running on my Hadoop cluster. The agent on the cluster is configured with an Avro source and an HBase sink, so when it receives data from the web server's agent through its Avro source, it writes the entry to HBase for long-term storage. I also write the data into HDFS as a second archive in case anything ever happens to HBase. Below is the configuration file for the agent sitting on the web server.
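As a hedged illustration of what such a web-server agent can look like, the Python sketch below renders a Flume properties file that uses Flume's exec source (running `tail -F`), memory channel and avro sink. The agent name `webagent`, the component names `r1`/`c1`/`k1`, the log path, the hostname `hadoop-collector.example`, port 4141 and the helper `render_flume_config` are all hypothetical placeholders rather than values from my actual setup.

```python
def render_flume_config(agent: str, components: dict) -> str:
    """Flatten nested settings into Flume's agent.<kind>.<name>.<key> = value lines."""
    lines = []
    for kind in ("sources", "channels", "sinks"):
        named = components.get(kind, {})
        # Declare the component names first, e.g. "webagent.sources = r1"
        lines.append(f"{agent}.{kind} = {' '.join(named)}")
        for name, settings in named.items():
            for key, value in settings.items():
                lines.append(f"{agent}.{kind}.{name}.{key} = {value}")
    return "\n".join(lines) + "\n"


# Hypothetical web-server agent: placeholder paths, host and port.
WEB_SERVER_AGENT = {
    "sources": {
        # exec source: follow the access log and emit one event per new line
        "r1": {
            "type": "exec",
            "command": "tail -F /var/log/apache2/access.log",
            "channels": "c1",  # sources take a list of channels
        },
    },
    "channels": {
        # memory channel: fast, but buffered events are lost if the agent dies
        "c1": {"type": "memory", "capacity": 10000, "transactionCapacity": 100},
    },
    "sinks": {
        # avro sink: forward events to the avro source on the Hadoop-side agent
        "k1": {
            "type": "avro",
            "hostname": "hadoop-collector.example",
            "port": 4141,
            "channel": "c1",  # each sink reads from exactly one channel
        },
    },
}

if __name__ == "__main__":
    print(render_flume_config("webagent", WEB_SERVER_AGENT), end="")
```

The printed file would typically be saved and started with `flume-ng agent --conf conf --conf-file webagent.conf --name webagent`. The exec source gives no delivery guarantee across agent restarts; Flume 1.7 and later also ship a TAILDIR source that records file offsets.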

The above is a basic configuration that tails a log file and listens for new entries. When a new entry is detected, it is placed in memory, since that is the channel type we have specified. The data is then sent out over our Avro sink and cleared from memory. On the Hadoop side, our agent is configured to listen for the Avro events being passed, write the data received over the wire into memory and send it to our sinks. In this configuration I have two sinks: one writing the data to HDFS as a flat file and one writing it to HBase. The configuration for this end is shown below.
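A hedged sketch of a collector agent with this shape follows, kept as a Flume properties string inside Python together with a small check. One detail matters for the two-sink layout: in Flume, sinks that read from the same channel compete for events, so each event would reach only one of them. To archive every event in both HDFS and HBase, each sink gets its own channel and the Avro source uses a replicating channel selector. The agent name, port, HDFS path, HBase table `weblogs`, column family `raw` and the helper functions are hypothetical placeholders.

```python
COLLECTOR_CONF = """\
collector.sources = r1
collector.channels = ch_hdfs ch_hbase
collector.sinks = sink_hdfs sink_hbase

# Avro source: receives events sent by the web server agent's avro sink.
# The replicating selector copies every event into both channels.
collector.sources.r1.type = avro
collector.sources.r1.bind = 0.0.0.0
collector.sources.r1.port = 4141
collector.sources.r1.channels = ch_hdfs ch_hbase
collector.sources.r1.selector.type = replicating

collector.channels.ch_hdfs.type = memory
collector.channels.ch_hbase.type = memory

# Flat-file archive on HDFS, one directory per day
collector.sinks.sink_hdfs.type = hdfs
collector.sinks.sink_hdfs.channel = ch_hdfs
collector.sinks.sink_hdfs.hdfs.path = /data/weblogs/%Y-%m-%d
collector.sinks.sink_hdfs.hdfs.fileType = DataStream
collector.sinks.sink_hdfs.hdfs.useLocalTimeStamp = true

# Long-term storage in HBase (the table must already exist).
# With no serializer set, each raw event body lands in a single column.
collector.sinks.sink_hbase.type = hbase
collector.sinks.sink_hbase.channel = ch_hbase
collector.sinks.sink_hbase.table = weblogs
collector.sinks.sink_hbase.columnFamily = raw
"""


def parse_properties(text: str) -> dict:
    """Minimal key = value parser (ignores blank lines and # comments)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def channels_shared_by_sinks(props: dict, agent: str) -> list:
    """Return channels drained by more than one sink; those sinks would split events."""
    sinks_per_channel = {}
    for sink in props[f"{agent}.sinks"].split():
        channel = props[f"{agent}.sinks.{sink}.channel"]
        sinks_per_channel.setdefault(channel, []).append(sink)
    return sorted(ch for ch, sinks in sinks_per_channel.items() if len(sinks) > 1)


if __name__ == "__main__":
    props = parse_properties(COLLECTOR_CONF)
    print(channels_shared_by_sinks(props, "collector"))
```

An empty list from the check means every sink has a channel of its own, so both the HDFS archive and HBase receive a full copy of the stream.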

In a production environment you would want to add one more step to the agent that writes data into HBase: serializing your data. In the interest of speed I have broken my data up in Python instead. The proper approach would be to write a serializer class in Java that splits each log entry before it is inserted into HBase and places every field in the proper column. Ingesting your data this way saves you from having to break it up every time you want to use it. For now I have written a quick Python script that extracts all the fields from each log entry and writes the entries to Hive, properly split up. This gives me a static schema, which makes my data much easier to access. I then connect to Hive to create visualizations and to query data to pass to a machine learning algorithm.
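As a sketch of that splitting step, assuming the web server writes the common Apache/Nginx "combined" log format, the following parses each line with a regular expression and emits a tab-delimited row suitable for loading into a Hive table. The pattern, the column names and the helpers `parse_line` and `to_hive_row` are hypothetical and would need adjusting to the real log format.

```python
import re
from typing import Optional

# Assumed "combined" format: ip ident user [time] "request" status size "referrer" "agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

# Hypothetical Hive column order
COLUMNS = ["ip", "time", "method", "path", "protocol",
           "status", "size", "referrer", "user_agent"]


def parse_line(line: str) -> Optional[dict]:
    """Split one access-log line into named fields, or return None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None  # malformed line: set it aside instead of guessing fields
    row = match.groupdict()
    if row["size"] == "-":
        row["size"] = "0"  # "-" means no response body was sent
    return row


def to_hive_row(row: dict) -> str:
    """Join the fields with tabs; stray tabs inside a field are replaced by spaces."""
    return "\t".join(row[col].replace("\t", " ") for col in COLUMNS)


if __name__ == "__main__":
    example = ('203.0.113.7 - - [10/Oct/2015:13:55:36 -0700] '
               '"GET /level1 HTTP/1.1" 200 2326 "-" "Mozilla/5.0"')
    print(to_hive_row(parse_line(example)))
```

Rows produced this way can be loaded into a Hive table declared with `ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'`, which gives the static schema described above.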

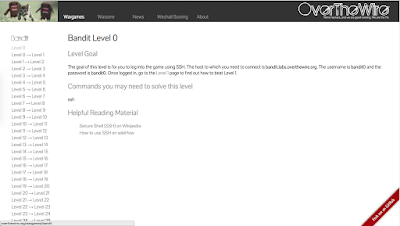

With a working pipeline for streaming my logs, it was time to generate some data. I decided to throw together a small CTF-style game and let the world play. Right now it has six levels ranging from easy to moderate difficulty. This approach worked very well, generating a little over 1.2 million log entries in the first four days. The site can be found here:

Check out the site and give the challenges a try to have your log entries added to the data pool. In part two I will discuss modeling the data to maximize the quality of information we can get from it. I will talk about running the modeled data through a clustering algorithm to find patterns and, more importantly, anomalies in our data. We will look at a user's data in a way that gives us a feel for who they are and what they are doing in our system. All IPs and related info will be blacked out in screenshots. All log data collected will be used internally only and will never be shared.
